Amazon S3 is one of the oldest AWS services: it launched on March 14, 2006, as the first generally available service on the AWS platform. Since its release, S3 has remained a core service used by nearly every AWS customer we’ve worked with.

One of the best cost-saving methods in S3 has been using lifecycle policies to move data into cheaper storage classes. The major problem users face is that lifecycle policies require some idea of their usage patterns, and an incorrectly configured policy can cost more than it saves. For example, moving objects to Glacier only to have them accessed the next week can incur charges that outweigh any savings: both the retrieval charges and the charge for the 90-day minimum storage duration.

We’ve seen this fear of Glacier charges become so prevalent among customers that several choose to use only Standard Storage and Infrequent Access (IA). Some customers are so unsure of their storage patterns that they avoid even Infrequent Access because of the 30-day minimum storage duration charges associated with moving or deleting data early.

S3 Intelligent-Tiering (INT, or Intelligent tier for short) has been a growing solution to these pain points. For a small per-object monitoring and automation fee ($0.0025 per 1,000 objects per month in all regions), this storage class moves each individual object between access tiers based on its access patterns.
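To get a feel for the size of that fee, here is a minimal sketch that applies the rate quoted above to an object count. The function name and the example object count are illustrative assumptions, not figures from any customer.

```python
# Rate quoted in the text: $0.0025 per 1,000 monitored objects per month.
MONITORING_FEE_PER_1000_OBJECTS = 0.0025


def monthly_monitoring_fee(object_count):
    """Estimate the monthly Intelligent tier monitoring fee in USD."""
    return object_count / 1000 * MONITORING_FEE_PER_1000_OBJECTS


# Hypothetical bucket holding 10 million objects.
fee = monthly_monitoring_fee(10_000_000)
print(f"Estimated monitoring fee: ${fee:.2f}/month")
```

The fee scales with object count rather than with stored bytes, so a bucket holding many small objects pays proportionally more overhead than one holding a few large objects.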

Amongst our customers, we saw only minor usage of Intelligent tier starting in February 2020, with steady increases through 2021. Although its features were limited at that time, there was still benefit in having objects monitored and transitioned automatically to IA after 30 days without access, even with the risk of minimum storage duration charges.

re:Invent 2021 is when we saw significant game changers in S3, including updates to Intelligent tier and the split of Glacier into Glacier Instant Retrieval and Glacier Flexible Retrieval. Below is a quick history of Intelligent tier storage. Even though it’s been around for several years, we’ve only recently seen significant adoption of this awesome automatic money saver.

- November 2018 – Intelligent tier announced

- November 2020 – Added Support for Glacier and Deep Archive as Opt-In

- September 2021 – Removal of minimum storage duration and removal of overhead charges for objects under 128KB

- November 2021 – Added Archive Instant Access tier

We saw Intelligent tier’s usage more than double shortly after the September 2021 update, when it took a big leap toward becoming a major savings opportunity for customers. Usage and savings continued to increase once Archive Instant Access (AIA) was added. Intelligent tier is now a strong recommendation we make to our customers. We generally see savings of around 30%, but some customers reach 50% or even more than 80%.

Intelligent tier has become a much more viable option since September 2021, and customers are taking notice. Although there are some easy and significant savings available with Intelligent tiering, it’s not meant for every use case. We’ve seen customers jump at Intelligent tiering without analyzing their objects first, and sometimes this leads to expensive transition costs or the realization that it’s simply not the right class of storage for that bucket.

AWS often advertises Intelligent tiering for data with unpredictable or unknown storage patterns, and this is really where the tool shines. Instead of having to play it safe and leave everything in normal Standard Storage, there are now several reasons to consider Intelligent tier.

Although it may seem like a magic solution, there are a few things to keep in mind before switching to Intelligent tiering:

- Small objects (under 128KB) are not auto-tiered and will permanently be charged at Frequent Access tier pricing

- Where storage patterns are well known, Lifecycle Policies may be more cost-effective

- Initial transition fees can be an important cost consideration

- Monitoring & Automation costs tend to be minimal but can tip the scale towards another solution, like Glacier, in some cases

- If data is already in Infrequent Access, expect it to be charged at Frequent Access rates for the first 30 days after transition to Intelligent tier, and factor in this initial increase
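One way to act on the small-object consideration is to transition only larger objects with a lifecycle rule. The following is a minimal sketch, assuming boto3 is installed and AWS credentials are configured; the rule ID and bucket name are hypothetical, and the transition itself still incurs the initial request fees mentioned above.

```python
# Objects under 128KB are never auto-tiered, so this sketch leaves them alone.
MIN_AUTO_TIER_BYTES = 128 * 1024


def build_intelligent_tiering_rule():
    """Build a lifecycle rule that moves larger objects to Intelligent tier."""
    return {
        "ID": "move-large-objects-to-intelligent-tiering",  # hypothetical name
        "Status": "Enabled",
        # ObjectSizeGreaterThan is a strict comparison, expressed in bytes.
        "Filter": {"ObjectSizeGreaterThan": MIN_AUTO_TIER_BYTES},
        "Transitions": [{"Days": 0, "StorageClass": "INTELLIGENT_TIERING"}],
    }


def apply_rule(bucket_name, rule):
    """Apply the rule. This REPLACES the bucket's existing lifecycle rules."""
    import boto3  # third-party; imported lazily so the builder runs without it

    s3 = boto3.client("s3")
    s3.put_bucket_lifecycle_configuration(
        Bucket=bucket_name,
        LifecycleConfiguration={"Rules": [rule]},
    )


rule = build_intelligent_tiering_rule()
print(rule)
# apply_rule("example-bucket", rule)  # hypothetical bucket; uncomment to apply
```

Because `put_bucket_lifecycle_configuration` overwrites the whole configuration, any existing rules on the bucket would need to be included in the `Rules` list alongside this one.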

Below are some examples from actual customers who decided to use Intelligent tier.

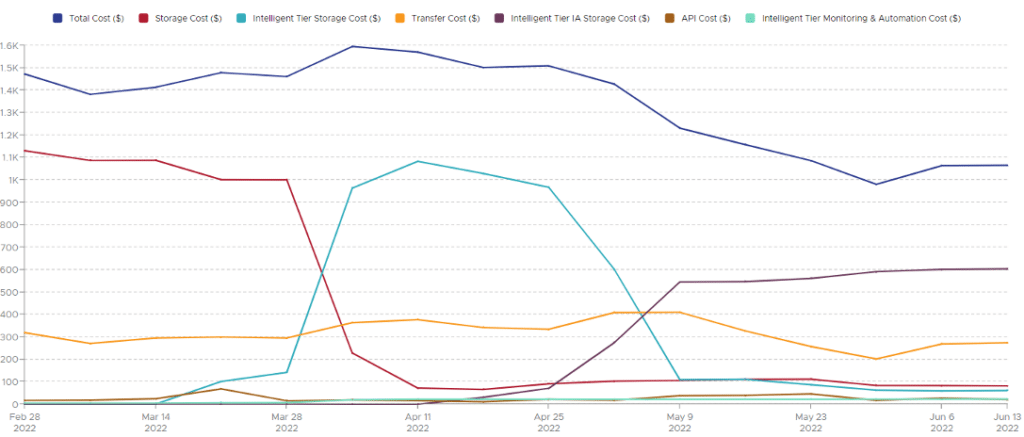

Customer Example #1 – ~33% savings

Customer’s weekly spend – The Customer was only using Standard Storage with unknown access patterns. Enabling Intelligent tiering led to nominal API and Monitoring & Automation costs. Overall cost dropped from around $1.5K/week to around $1K/week. Further savings are expected at the 90-day mark, when Objects rotate into INT-AIA Storage.

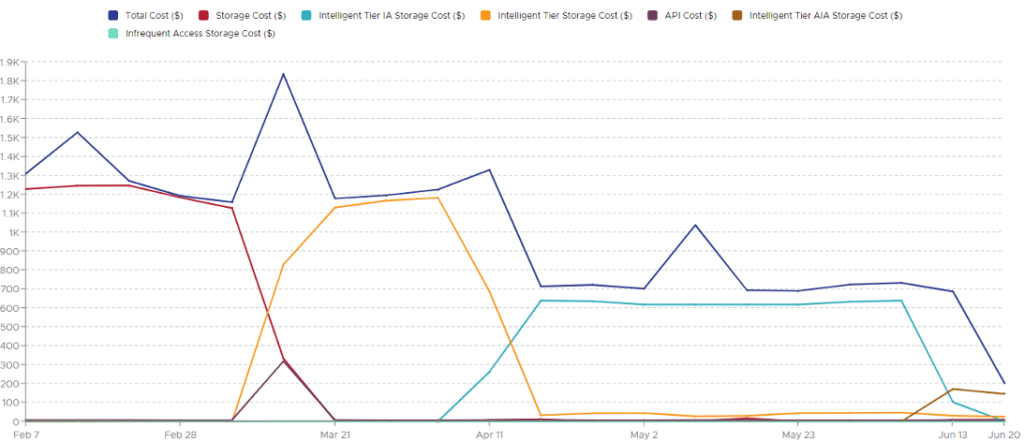

Customer Example #2 – ~50% savings, and then ~85% savings

Customer’s weekly spend – The Customer was only using Standard Storage with unknown access patterns. Enabling Intelligent tiering led to nominal Monitoring & Automation costs and a small $320 API charge for the initial transition to Intelligent tier. Overall cost dropped from around $1.25K/week to $680/week once INT-IA kicked in after 30 days, and further down to $205/week once INT-AIA storage cycled in at 90 days.
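The savings percentages can be checked from the weekly figures quoted in the two examples. This is a small sketch using those rounded figures as inputs; the function name and labels are our own.

```python
def savings_percent(before, after):
    """Percentage reduction in weekly spend."""
    return (before - after) / before * 100


# Rounded weekly spend figures (USD) quoted in the customer examples.
examples = {
    "Example 1, after INT-IA": (1500, 1000),
    "Example 2, after INT-IA": (1250, 680),
    "Example 2, after INT-AIA": (1250, 205),
}

for label, (before, after) in examples.items():
    print(f"{label}: {savings_percent(before, after):.1f}% savings")
```

With these rounded inputs the results come out at roughly 33%, 46%, and 84%, which is consistent with the headline figures being approximations.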

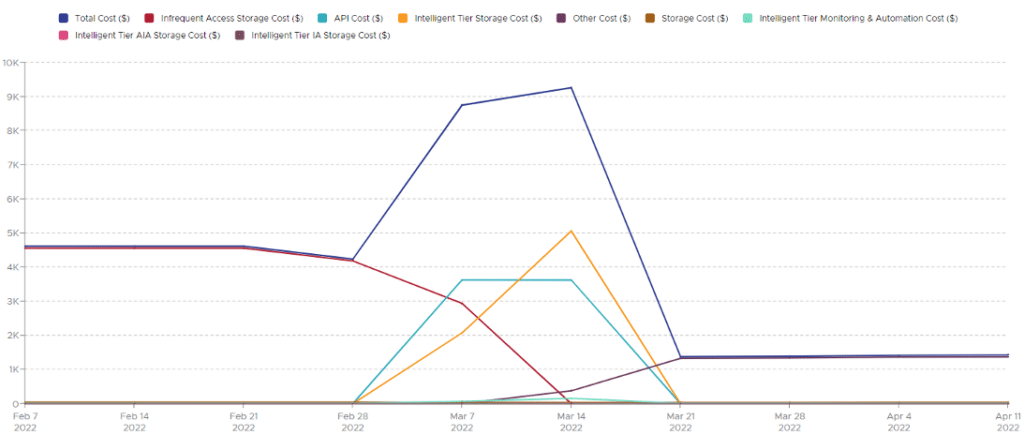

Customer Example #3 – Intelligent tier not efficient, switched to Glacier Instant

Customer’s weekly spend – This chart is filtered to a single bucket that was already 99% IA. It shows an overall cost increase from moving Objects to Intelligent tier, since the objects are classed as Frequent Access for at least 30 days before returning to INT-IA. A further 60 days would be required for INT-AIA. In addition, about $900/mo in Monitoring and Automation costs would apply.

The customer did not do pattern analysis before this change. After running S3 Storage Class Analysis on the bucket, we found that only about 2GB/day of the 1.5PB was being accessed, which meant Glacier Instant was the best solution for the customer. This saved the customer from having to wait 90 days for the transition to INT-AIA and eliminated the $900/mo in Monitoring and Automation costs.
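Two quick calculations put these numbers in perspective. This is a rough sketch, assuming the $0.0025 per 1,000 objects monthly monitoring rate quoted earlier, decimal units (1PB = 1,000,000GB), and that each day's 2GB of access touches different data, which makes the access share an upper bound.

```python
MONITORING_FEE_PER_1000_OBJECTS = 0.0025  # USD per month, as quoted earlier


def implied_monitored_objects(monthly_monitoring_cost):
    """Object count implied by a monthly monitoring bill."""
    return round(monthly_monitoring_cost / MONITORING_FEE_PER_1000_OBJECTS * 1000)


def accessed_share_percent(accessed_gb_per_day, total_gb, days=30):
    """Upper bound on the share of data touched over a period, in percent."""
    return accessed_gb_per_day * days / total_gb * 100


TOTAL_GB = 1_500_000  # 1.5PB, assuming decimal units

print(f"Implied monitored objects: {implied_monitored_objects(900):,}")
print(f"Share accessed in 30 days: {accessed_share_percent(2, TOTAL_GB):.4f}%")
```

Under those assumptions, the $900/mo bill corresponds to roughly 360 million monitored objects, while at most about 0.004% of the data is read in a month. With access that rare and that predictable, a fixed storage class avoids the monitoring fee without much retrieval risk.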

NOTE – “Other Cost” on this chart represents the costs for Glacier Instant Retrieval

From these examples, we can see that Intelligent tier has a lot of potential to significantly lower costs on buckets with unknown or inconsistent storage patterns. However, we can also see a case where not analyzing storage patterns first caused the customer to pay extra API costs and have a less cost-effective solution. Keeping the above considerations in mind, it’s clear that Intelligent tier is a very valuable tool to have in any cost optimization discussions.

For other cost savings tips, we at Converge offer a Cloud Advisor service made up of experts in AWS, Azure, and GCP. We’re happy to provide a free trial period of our service, which includes a monthly cost optimization report, dedicated Cloud Advisor, and insight into your environment to drive costs down on your monthly bill.