The world is talking about ChatGPT, large language models (LLMs), and other modes of artificial intelligence. So much so that I believe we’re witnessing what will be the zeitgeist of the 2020s. Simultaneously amazing and terrifying, these tools have everyone wondering what they mean for the future.

The rewards of ChatGPT and similar tools are relatively easy to understand—tasks done quickly, easily, and possibly better. On the other side of the equation are the risks. That’s my vantage point for this article. Using ChatGPT and other LLMs in a corporate environment can introduce vulnerabilities that expose organizations to data disclosure and system compromise.

Easy to hack

With ChatGPT, generating realistic-looking phishing emails that bypass email filtering controls has never been easier. The same goes for malware that evades endpoint protection products and for other scenarios that sidestep security controls. ChatGPT has guardrails in place, but getting it to work around them is easy.

I’m not saying AI is easy to hack as a blanket statement. But in my personal experience, whenever I ask ChatGPT to do something for me and the safeguards notice that I am talking about hacking, I just need to explain that what we’re doing is OK or rephrase slightly, and it usually goes along, spitting out what I asked for. I see ChatGPT as my own personal Yes Man from Fallout: New Vegas.

Source: Fallout: New Vegas – Honestly, that’s the basic idea of almost every escape I’ve seen so far.

Truthfully, collections of bypass prompts are shared almost daily (including one named Yes Man!) because many users aren’t happy that limitations are placed on what ChatGPT is or isn’t allowed to help with, even if those safeguards were created with good intentions to curb racism, sexism, and the spread of dangerous information.

I get that those seem like justifiable limitations. But at the same time, those of us who do offensive work and have legitimate reasons should be able to ask it to bypass things a bit. Granted, validating what is and isn’t legitimate is problematic, so for now I appreciate that it’s easy to bypass.

Wait, who owns those corporate secrets now?

Using ChatGPT to create content, code, and other output for use in products, services, or processes is risky business.

For anyone who inputs corporate data for use in ChatGPT, I have some bad news: You may have personally signed over ownership of that data to be used for training by OpenAI when you pasted it into their web interface.

Surprised? Not convinced? Well, there was a big hullabaloo when it happened to Samsung earlier this year, and you can get more details by checking out the original source material (use Google Translate to help out). To OpenAI’s credit, they updated their Terms of Use. Still, let’s put on our armchair lawyer hat for half a minute (obviously, I am not a lawyer; do not take any of this as legal advice; talk to your own lawyer if you’re concerned, etc.), mix in a little internet sleuthery, and read a little bit of the OpenAI Terms of Use.

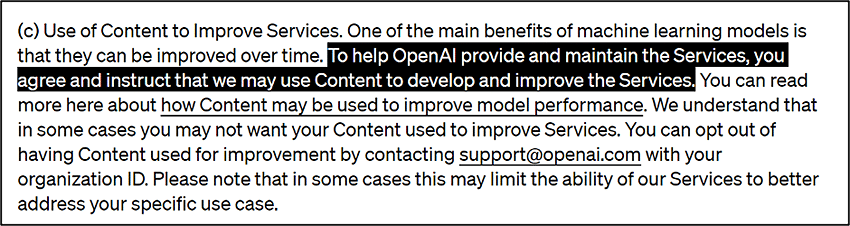

This is what OpenAI’s Terms of Use page looked like on February 27, 2023. This happens to be the first date this page was copied by archive.org. A small excerpt is included below (highlighting is mine):

Here is the way it looks currently at the date of publication. (Again, highlighting is mine.)

Well, that’s an interesting and clear change. Today, if you’re paying for and accessing via the API, they’re not using your data for training, but if you’re a non-paying person using the website to talk with ChatGPT, every question and every response may be going into training data because it’s all “Content.”

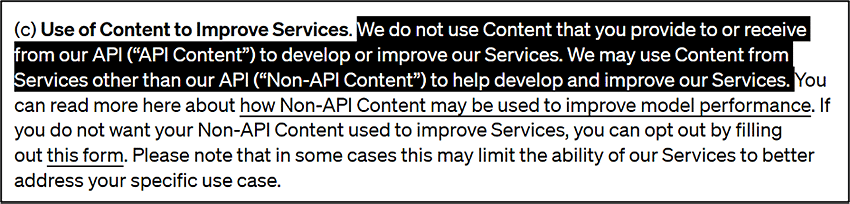

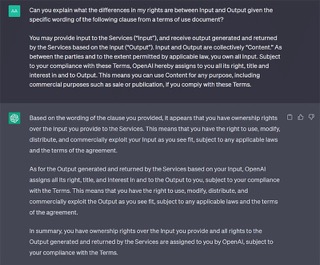

Now, to their credit, both copies I linked offer a way for users to attempt to opt out, either via email to their support address or through an included link to a Google Forms page. In an earlier section in both versions, they note that the Content is all yours, and certainly not theirs, though using what strikes me as very specific wording.

Oh hey… ChatGPT, I know I’ve been talking a lot of smack, but… you passed the Bar exam, right? Can you take a quick look for me, pretty please?

Cool, so both input and output are yours as long as you don’t break the Terms of Use, the same Terms of Use that OpenAI can change at any time, for any reason, without any notification or cause…😐

But there is also that other section that states that OpenAI must log “Content” for legal purposes, so they’re keeping a copy of input data regardless. Depending on personal security preferences or corporate security policy, you may need to establish rules for what can and can’t be put into ChatGPT’s web interface.

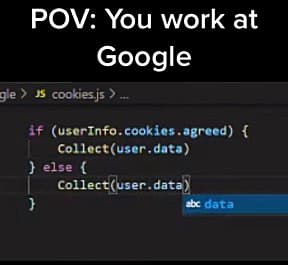

With logging, if ChatGPT or another LLM-based AI solution is compromised, so is your data. In fact, that scenario has already happened. Personally, I trust ChatGPT about as much as I trust Google Chrome not to log everything I do.

Pure speculation, but don’t we all assume this?

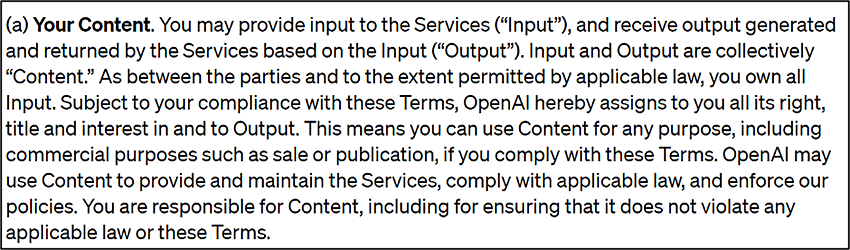

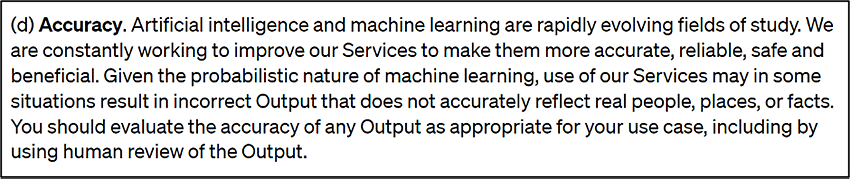

This isn’t a law piece, and I am thankfully still not a lawyer, but I find it interesting that the section below wasn’t in the original Terms of Use but was added at some point.

So, in the world of ChatGPT, those hallucinations you’ve been hearing about are entirely normal, part of the iterative process, and to be expected. The takeaway for anyone using ChatGPT: never trust its output on its own, because it makes things up, and always have a human review that output before using it for anything. This limits its usefulness in automation or customer interactions unless strict checks on inputs and outputs are implemented. Those checks would amount to the equivalent of spam filtering rules for AI-based virtual agents, applied to both input and output.
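To make the spam-filter comparison concrete, here is a minimal Python sketch of checks on both sides of a model call. Everything in it is an assumption for illustration: the patterns, the `guarded_reply` name, and the `model_call` parameter (any function that takes a prompt and returns text) are hypothetical and not part of any OpenAI API. Real rules would need to be far more thorough than two or three regular expressions.

```python
import re

# Hypothetical rules for illustration only; tune these to your own data.
INPUT_DENY = [
    re.compile(r"ignore (all|any|previous) .*instructions", re.IGNORECASE),
]
OUTPUT_DENY = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),                    # looks like a US SSN
    re.compile(r"api[_-]?key\s*[:=]\s*\S+", re.IGNORECASE),  # looks like a leaked key
]


def first_match(text, patterns):
    """Return the first pattern that matches text, or None."""
    for pattern in patterns:
        if pattern.search(text):
            return pattern
    return None


def guarded_reply(user_input, model_call):
    """Filter the prompt, call the model, then filter the response."""
    if first_match(user_input, INPUT_DENY):
        return "REJECTED_INPUT"
    output = model_call(user_input)
    if first_match(output, OUTPUT_DENY):
        return "HELD_FOR_REVIEW"  # route to a human instead of the customer
    return output
```

In use, `model_call` would wrap whatever client your application already has. The point of the design is that nothing goes to the model or comes back from it without passing a check, and anything suspicious lands in front of a person.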

Fighting ChatGPT-fueled adversaries

The worst-performing AIs you will ever deal with are the versions available right now. Setting protections in place now makes it easier and less costly to adapt as the technology changes.

- Invest in perimeter, email, and endpoint security solutions with a proven track record of detecting and preventing malicious activity.

- Have an incident response plan in place in the event of a breach and test it regularly.

- Routinely perform penetration tests against security controls and consider more advanced testing, such as ransomware assessment services, to identify gaps that threat actors could exploit.

ChatGPT and employees

- To prevent employees from accidentally sharing corporate secrets or sensitive data on ChatGPT, add blocks to your web filter and/or next-gen firewall for “chat.openai.com.” That should help prevent users from directly communicating with it over the standard web interface.

- You can block more of their domain if you’re uncomfortable with your employees potentially using the API or other interfaces or tools of OpenAI.

- The previous two measures are not a complete fix, since tools are being created to abuse third-party services that use OpenAI. You can review this repository for domains that should be blocked if you want to prevent more technically minded users from accessing the service via proxy.

- Remind managers and anyone doing document reviews to look for anything that appears to have been written by AI instead of a person.

- Create policies and procedures regarding the internal use of AI and any guardrails that apply. Employees need to know which, if any, AI use cases are acceptable. Make this part of corporate security training.

- Update security training materials to include information on deepfakes. You can also consider creating verbal passphrases or other MFA measures for additional verification to address concerns about someone’s face and/or voice being cloned. Tip: Ask the person on the video to move around, use their hand to cover part of their face, or to tilt their head 90 degrees to one side. Most face-swapping software fails almost immediately with these, usually in weird ways, especially if too much of the face is covered or if the face turns too far. Eventually, this software will advance to handle these tells, but it’s decent advice for now.
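For the domain-blocking items in the list above, the matching logic a web filter applies can be sketched in a few lines of Python. This is only an illustration of exact-versus-suffix matching, not the configuration syntax of any particular firewall; the `is_blocked` and `host_of` names are hypothetical, and the only domain taken from this article is `chat.openai.com`.

```python
from urllib.parse import urlsplit

# "chat.openai.com" comes from the article; widen to "openai.com"
# if you also want to cover the API and other interfaces.
BLOCKED_DOMAINS = {"chat.openai.com"}


def host_of(url):
    """Extract the lowercase hostname from a full URL."""
    return (urlsplit(url).hostname or "").lower()


def is_blocked(host, blocked=BLOCKED_DOMAINS):
    """True if host equals a blocked domain or is a subdomain of one."""
    host = host.strip().rstrip(".").lower()
    return any(host == d or host.endswith("." + d) for d in blocked)
```

Matching on a leading dot matters: blocking `openai.com` should catch `api.openai.com` but not an unrelated domain that merely ends with the same letters. Proxies and third-party front ends will still slip past a list like this, which is why the policy and training items matter too.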

ChatGPT and code writers

Coders need the information above plus these additional instructions:

- Educate your team about code generated by tools like ChatGPT and how it may re-create licensed code. Recommend that they look for the generated examples online before using them directly.

- Ensure all AI-generated code is reviewed before putting it into development or production.

- Don’t allow corporate source code to be pasted into the chat interface if there are any concerns with it being shared publicly. If your developers want to use ChatGPT for debugging, changing parts of the code or giving the AI tool as little context as possible can help, but ultimately, some code still leaks this way.
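As a rough illustration of "changing parts of the code" before it goes anywhere near a chat window, here is a small Python sketch that scrubs a snippet first. The patterns and the `redact` name are my own assumptions, they will both miss things and over-redact (the IP pattern also hits four-part version numbers), and they do nothing about proprietary logic in the code itself.

```python
import re

# Hypothetical redaction rules; extend them for your own codebase.
REDACTIONS = [
    # Quoted values assigned to names that look like credentials.
    (re.compile(r"""(\w*(?:password|passwd|secret|token|api_key)\w*)(\s*[:=]\s*)(['"])[^'"]*\3""",
                re.IGNORECASE),
     r"\1\2\3REDACTED\3"),
    # Email addresses.
    (re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"), "user@example.com"),
    # IPv4-looking addresses.
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "0.0.0.0"),
]


def redact(source):
    """Apply every redaction rule in order and return the scrubbed text."""
    for pattern, replacement in REDACTIONS:
        source = pattern.sub(replacement, source)
    return source
```

A developer could run a file through `redact` and review the result by eye before pasting. It lowers the odds of an obvious leak, but as noted above, some code still leaks this way.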

Using AI in an application or product

Take the instructions above and add the following:

- Developers need to consider how using AI can go wrong and ensure filters on input and output from the AI help reduce incidences of inappropriate or unintended behavior. This reminder should be part of regular secure coding training. You don’t want your program to be the next Microsoft Tay!

- Take exceptional care with any user input. Issues here can lead to multiple types of failure in more complex systems. For example, prompt injection can modify the AI’s behavior so that its output triggers SQL injection, information disclosure, authentication bypass, and possibly many other classes of vulnerabilities, depending on where the data goes within the application.

- Ensure your test data is as diverse, varied, and representative as possible when training systems. If the systems interact with humans, do testing with people of all ages, colors, nationalities, gender identities, and more. Diversity is essential to avoid an AI trained for a limited group.

- Use caution in any situation combining AI and robotics. Know how the systems will fail safely and avoid any chance of harming humans, animals, the environment, and anything else the machine may encounter out in the field.

- Consider the societal impact of the project, who could be displaced, and what negative impacts are possible. Mistaken assumptions based only on an ideal situation could cause harm.
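On the user-input item above, the safest mental model I know is to treat anything the AI produces as attacker-controlled. Below is a minimal sketch using Python’s built-in `sqlite3` module; the `orders` table, the `find_orders` function, and the idea that `customer` and `sort_by` were parsed out of a model’s response are all assumptions for illustration.

```python
import sqlite3

# Column names cannot be bound as parameters, so they are checked against an allowlist.
ALLOWED_SORT = {"id", "total"}


def make_demo_db():
    """Build a throwaway in-memory database for the example."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, total REAL)")
    conn.executemany(
        "INSERT INTO orders (customer, total) VALUES (?, ?)",
        [("alice", 10.0), ("bob", 25.5), ("alice", 7.25)],
    )
    return conn


def find_orders(conn, customer, sort_by):
    """Query with values that are assumed to come from untrusted model output."""
    if sort_by not in ALLOWED_SORT:
        raise ValueError(f"unsupported sort column: {sort_by!r}")
    # The value is bound with a placeholder and never formatted into the SQL text.
    sql = f"SELECT id, customer, total FROM orders WHERE customer = ? ORDER BY {sort_by}"
    return conn.execute(sql, (customer,)).fetchall()
```

With this shape, a prompt-injected value such as `alice' OR '1'='1` is just a customer name that matches no rows, and an unexpected sort column is rejected outright. It does not stop prompt injection itself; it only keeps the injected text from becoming SQL.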

Getting ahead of the AI curve

ChatGPT and LLMs are in their infancy and will grow more prevalent and capable. AI has huge potential in many contexts, but there are security risks to consider with its use.

Be proactive and get ahead of the curve now to mitigate potential risks. Develop and implement usage policies and processes to enable users to leverage these tools securely. Use regular testing and assessments to ensure that existing policies and processes continue to mitigate threats in line with your business’s risk appetite and AI’s advancing capabilities.

For consultative help in protecting your sensitive and critical data from ChatGPT and other potential misuse and leaks, reach out to our expert team today.