The Next Wave in Generative AI: Trends and Updates

In the last year, generative AI (GenAI) has exploded into the marketplace, the media, and the tech industry, and it is moving and changing more quickly than anyone could have guessed. There is good reason for the excitement: the technology is packed with game-changing potential and shows surprisingly sophisticated inventiveness and creativity. Generative AI and large language models (LLMs) produce content that can rival human expertise, using statistical models trained on massive quantities of data. They create impressive new outputs, from stories and articles to audio and video, and even functioning computer code.

Most in the industry see the real potential: new modes of working, big cost savings, and captivating new revenue streams. Many players are investing massive amounts of capital to move generative AI forward, and hundreds of models have already been released into the market. It’s happening so quickly that the landscape changes by the day.

Interested? Feeling like you need to jump in? Or at least wondering where to begin? You are not alone. But strap in because things are moving quickly.

Some basics: GenAI concepts

Simply put, from a user’s point of view, generative AI solutions are tools that can autonomously create brand-new outputs (e.g., text, photos, videos, code, or data) from a written request. In short, it is artificial intelligence that generates.

For example, I might ask GenAI to plan my dream vacation. I simply enter my request into the tool as a plain English prompt, such as “I want to plan my dream vacation. Can you suggest an exciting destination and help create an itinerary?” The AI returns a meaningful, intelligent, human-sounding response with the information I am looking for. In this example, the AI suggests a trip to Japan, explains why, and provides a feasible itinerary.

In the same example, I could ask it to generate an entirely new (but fabricated) photo of what I might see there, or to translate Japanese into English.

Powering all of this is complex math involving billions or even trillions of variables (parameters) within the LLM. An LLM is a type of generative AI model that takes in some text (a prompt) and predicts the most likely response, one token at a time, based on statistical connections between tokens learned from massive data sets. (In this context, tokens are units of data made of words or fragments of words.) These models come in different varieties: base models are general-purpose, while chat models are tuned to simulate human conversation. More advanced, multimodal GenAI models understand text, audio, images, video, code, and more, and can translate between these formats. An LLM may be trained on over a trillion tokens, the data equivalent of a human reading and retaining the contents of 10 million books.
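To make “predicting the next token from statistical connections” concrete, here is a deliberately tiny Python sketch. It is an illustration under simplifying assumptions, not how production LLMs work: it splits text on whitespace instead of using a subword tokenizer, and it simply counts which token follows which (a bigram model) rather than learning billions of parameters in a neural network. The function names and the three-sentence corpus are hypothetical.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    # Toy tokenizer: lowercase and split on whitespace. Real LLMs use subword
    # tokenizers that can split words into fragments.
    return text.lower().split()


def train_bigram(corpus: list[str]) -> dict[str, Counter]:
    # Count how often each token is followed by each other token.
    counts: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = tokenize(sentence)
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts: dict[str, Counter], token: str) -> str | None:
    # Return the most frequent follower of `token`, or None if it was never seen.
    followers = counts.get(token.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


corpus = [
    "plan my dream vacation to japan",
    "plan my trip to japan",
    "plan my dream home",
]
model = train_bigram(corpus)
print(predict_next(model, "my"))  # dream
print(predict_next(model, "to"))  # japan
```

Here “dream” follows “my” twice and “trip” only once, so the sketch predicts “dream”. An LLM does something analogous at vastly larger scale, with learned probabilities over a vocabulary of word fragments.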

Prompt engineering

To use these models, people write plain English text that prompts the model to provide information.

Getting good results requires prompt engineering: crafting the prompts or instructions you give an AI model so that they elicit a specific desired response or behavior. This can include writing prompts that reduce ambiguity, mitigate bias, or steer tone and style. Adapted prompts can also help customize a pre-trained model’s behavior for specific applications or domains.

For example, the prompt “summarize this article on climate change” may adequately provide the answer you are looking for. But you can be more creative or specific, such as asking: “You are a climate expert. Can you provide a concise summary of the main points in this article about climate change? Ensure that a high schooler could understand it.” The fuller prompt will most likely produce a better response that is tailored to your specifications.
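The difference between the two prompts can be expressed as a small template. The Python sketch below is a hypothetical helper (not any vendor’s API) that adds an optional role and an optional audience constraint to a base task; with both filled in, it reproduces the fuller climate-change prompt from the example. Sending the result to a model is left out, since that depends on the provider you use.

```python
from __future__ import annotations


def build_prompt(task: str, role: str | None = None,
                 audience: str | None = None) -> str:
    """Assemble a prompt from a task plus optional role and audience hints."""
    parts: list[str] = []
    if role:
        # A persona steers the expertise and tone of the response.
        parts.append(f"You are {role}.")
    parts.append(task)
    if audience:
        # An explicit audience reduces ambiguity about the reading level.
        parts.append(f"Ensure that {audience} could understand it.")
    return " ".join(parts)


basic = build_prompt("Summarize this article on climate change.")
detailed = build_prompt(
    "Can you provide a concise summary of the main points "
    "in this article about climate change?",
    role="a climate expert",
    audience="a high schooler",
)
print(basic)
print(detailed)
```

Keeping the role and audience as separate parameters makes it easy to reuse the same task with different personas or reading levels and compare the responses.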

Retrieval-Augmented Generation (RAG)

Counterintuitively, most AI models neither store your data nor use it for training. The AI takes your prompt to produce an answer, but the underlying model doesn’t change. Depending on which LLM you are using and its terms of service, though, some providers may collect your prompts and the model’s outputs and use them to improve the AI’s performance by retraining the model.

An LLM can still provide custom, specific value to a user without storing their data. The most common way is Retrieval-Augmented Generation (RAG), which combines two types of models: retrieval-based models and generative models. In effect, you use one type of model to retrieve your data and another to summarize it. First, the user’s question is translated into a mathematical representation (an embedding) by a language or tokenization model. Using that representation, the retrieval component finds relevant information in a knowledge base. The retrieved information is then passed to the generative model as a “data sandwich” of the initial question, the retrieved content, and a tuned prompt. This enables the generative model (the LLM) to summarize the retrieved content, which is what allows an LLM to answer questions using your data.

The RAG process
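The RAG flow above can be sketched in a few dozen lines of Python. This is a simplified illustration under stated assumptions: a bag-of-words term-count vector stands in for a learned embedding model, retrieval is cosine similarity over an in-memory list instead of a vector database, and the final call to an LLM is omitted. The sample documents, the helper names, and the order of the pieces in the “data sandwich” are hypothetical.

```python
from __future__ import annotations

import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Toy "embedding": term counts. Real RAG systems use a learned embedding
    # model that maps text to dense numeric vectors.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question: str, knowledge_base: list[str], k: int = 1) -> list[str]:
    # Retrieval step: rank documents by similarity to the question, keep top k.
    q = embed(question)
    ranked = sorted(knowledge_base, key=lambda doc: cosine(q, embed(doc)),
                    reverse=True)
    return ranked[:k]


def build_rag_prompt(question: str, passages: list[str]) -> str:
    # The "data sandwich": instructions, retrieved content, original question.
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer the question using only the context below.\n"
            f"Context:\n{context}\n"
            f"Question: {question}")


knowledge_base = [
    "Our refund policy allows returns within 30 days of purchase.",
    "Support hours are 9am to 5pm Eastern, Monday through Friday.",
    "Premium plans include a dedicated account manager.",
]
question = "What are your support hours?"
passages = retrieve(question, knowledge_base)
prompt = build_rag_prompt(question, passages)
print(prompt)
# Generation step (not shown): send `prompt` to the LLM of your choice.
```

Because only the retrieved passage and the question are sent to the model at answer time, the model itself is not retrained on the knowledge base.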

Can I train an LLM on my data?

While you may be able to fine-tune some aspects of a model, creating a new LLM is a huge lift. You may need one or two trillion tokens of training data, and sometimes as many as 30 trillion. For reference, the entire Library of Congress contains about 2.4 trillion tokens of data. Training the model takes thousands of GPUs running for weeks or months. Fine-tuning, on the other hand, can be done by taking a thousand to a hundred thousand examples from your data and using them to adjust the outer layers of an existing model. Fine-tuning may require only a few GPUs, though it can still take days or weeks.
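For a sense of scale, the short Python calculation below uses only figures quoted in this article: a trillion tokens is roughly 10 million books, the Library of Congress holds about 2.4 trillion tokens, and some training runs use as many as 30 trillion tokens. All values are approximate.

```python
# Approximate figures quoted in this article (orders of magnitude only).
TOKENS_IN_ONE_TRILLION = 1_000_000_000_000
BOOKS_PER_TRILLION_TOKENS = 10_000_000              # "a trillion tokens ≈ 10 million books"
LIBRARY_OF_CONGRESS_TOKENS = 2_400_000_000_000      # about 2.4 trillion tokens
LARGEST_TRAINING_SET_TOKENS = 30_000_000_000_000    # "as many as 30 trillion tokens"

# Implied average length of a book, in tokens.
tokens_per_book = TOKENS_IN_ONE_TRILLION // BOOKS_PER_TRILLION_TOKENS

# How many Libraries of Congress a 30-trillion-token training set represents.
libraries_of_congress = LARGEST_TRAINING_SET_TOKENS / LIBRARY_OF_CONGRESS_TOKENS

print(f"~{tokens_per_book:,} tokens per book")            # ~100,000 tokens per book
print(f"~{libraries_of_congress} Libraries of Congress")  # ~12.5 Libraries of Congress
```

In other words, the largest training sets mentioned here are roughly twelve and a half times the size of the Library of Congress.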

***

Lightning updates – AI news to make your head spin

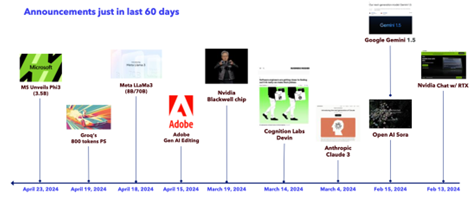

Things are moving ridiculously fast in the GenAI space. In the past 60 days alone, a significant player has made a major AI announcement nearly every week. The chart above shows major announcements from February to April 2024, highlighting big news from the likes of Microsoft, Meta, Nvidia, Adobe, Google, and others.

Updates to models

Models are being updated more frequently, and there are many of them: over 300 models are on the market right now from 50 or 60 reputable providers. The biggest updates to the AI models themselves include:

- Getting trained on more data. Some models have gone well past the two-trillion-token mark: Meta’s Llama 2 was trained on two trillion tokens, while Llama 3 was trained on 15 trillion. Google’s Gemini reportedly draws on a whopping 30 trillion tokens.

- Getting bigger in size. For example, by some estimates ChatGPT had 20 billion parameters in 2022, while new models are now pushing two trillion parameters.

- Some models are getting smaller (slimmed down) depending on their use cases and where they need to run (e.g., on a laptop), yet they perform as well as larger models within their specific domains.

- Open-source software (OSS) models are emerging, democratizing access to AI innovation for larger groups of developers and users.

- Domain models present a new opportunity to tailor AI for specific industries and applications, such as specialized AIs for law, medicine, or science.

- Multimodal models are becoming more available. They integrate diverse data types, such as text, images, and code, for richer AI understanding, and can translate between them (e.g., text to image).

- Autonomous agents are empowering AI systems to act and learn independently. Rather than just providing text answers, they take real actions, such as executing code or running SQL queries against a database.

- Models are improving in performance and accuracy as they continue to be trained and poor responses are weeded out.
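The autonomous-agent pattern, in which a model chooses an action and the system executes it, can be sketched in Python with the standard-library `sqlite3` module. The “model” here is a hypothetical stub that always returns the same structured action; in a real agent, an LLM would generate that action from the user’s request. The table, query, and function names are illustrative assumptions.

```python
import sqlite3


def plan_action(request: str) -> dict:
    # Hypothetical stand-in for an LLM. A real agent would prompt the model to
    # turn the user's request into a structured action like this one.
    return {"tool": "sql",
            "query": "SELECT COUNT(*) FROM orders WHERE status = 'open'"}


def run_sql(conn: sqlite3.Connection, query: str) -> list:
    # Simple guardrail: only allow read-only SELECT statements.
    if not query.lstrip().upper().startswith("SELECT"):
        raise ValueError("only SELECT queries are allowed")
    return conn.execute(query).fetchall()


def agent_step(conn: sqlite3.Connection, request: str) -> list:
    action = plan_action(request)
    if action["tool"] == "sql":
        # The result (observation) would normally be fed back to the model so
        # it can decide the next step or phrase a final answer.
        return run_sql(conn, action["query"])
    return []


# Demo database with three orders, two of them open.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT)")
conn.executemany("INSERT INTO orders (status) VALUES (?)",
                 [("open",), ("closed",), ("open",)])
print(agent_step(conn, "How many orders are still open?"))  # [(2,)]
```

The SELECT-only check is a crude safeguard; it illustrates why agents that act on real systems need guardrails before they are allowed to touch production data.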

Updates on data

As AI models consume more and more data, some organizations are finding new use cases while others are running into problems with data ownership and access. For example, Getty Images relies on the proper use of its images for licensing revenue. It used AI to identify terms-of-service violations by detecting images that carry its digital watermarks, the technology it uses to control the rights to the images it owns and sells. In another example, OpenAI has claimed that it has run out of data because it has consumed so much. In its quest to find more, it turned to audio, mining conversations from YouTube videos.

Updates on hardware and infrastructure

AI tools perform better on specialized hardware and infrastructure suited to their processing needs, and AI requires a lot of compute power. Currently, NVIDIA dominates the market for GPUs used in AI training and inference. AMD is catching up and making investments. Groq has announced a language processing unit (LPU), a chip designed for inference, and offers both the chipset and a cloud service. Microsoft and Apple are both getting into the AI hardware game. There’s also a push to run inference on smaller models so they can operate on personal hardware, such as laptops or mobile devices.

On the infrastructure and server front, providers such as AWS, Google Cloud Platform, Azure, and IBM all offer infrastructure for AI models, and each is constantly changing and improving its offerings. Things are changing so quickly that if you don’t see what you need now, it will likely be available soon.

Updates on regulations

Governments are wary of the potential dangers of AI, particularly where it could violate human rights or enable crime in areas such as privacy, misrepresentation, and fraud, or simply treat human users unethically. For example, the EU has passed its new AI Act, which prohibits AI practices that violate fundamental human rights, such as social credit scoring, certain uses of emotion recognition, exploitation of vulnerabilities, untargeted scraping of facial images, and biometric categorization, among others. The act identifies “high-risk” AI uses, such as in medical devices, vehicles, elections, and critical infrastructure. It also defines categories of limited-risk and minimal-risk AI, such as AI used in video games. As AI use becomes more prevalent and powerful, more regulation is likely to be enacted.

***

Opportunity for GenAI and where to start

The promise of AI technology is that it will save billions of dollars and create tremendous new economic opportunity. A recent McKinsey study¹ predicts that AI will produce massive productivity gains across key business functions: for example, around $404 billion for customer care and $485 billion for software engineering. McKinsey also sees big gains in marketing, R&D, sales, and retail/CPG. These big opportunities may also be good targets for a company looking to get started with AI.

For example, nearly every company has someone performing some level of customer support. These models can take customer questions as text (even when they originate as voice) and provide answers, either serving customers directly or quickly giving frontline agents the information they need. Simple requests can be answered faster and more accurately. In customer care, employee churn and training are persistent issues; AI can vastly improve the employee experience, reducing churn and speeding up training when new staff need to be onboarded.

Software engineering and application modernization are other big opportunities. Software constantly needs new code, updated code, code conversion, artifact creation, testing, and more, and AI can speed up this work by generating code instantly, giving human engineers a fast, high-quality starting point.

Selecting a first use case should focus on value and feasibility. If an AI solution doesn’t generate revenue, increase productivity or quality, or reduce costs, it should not be selected. The use case should also be achievable given today’s technology and the organization’s readiness in terms of data, applications, skills, and so on. A use case that is too difficult, too expensive, or too slow to implement increases the chance of failure. Some best practices for where and how to start with generative AI include:

- Start with ROI and value creation. Look for big value.

- Understand what your employees are doing now. Let them discover needs within your business.

- Invest in AI literacy and enablement.

- Implement with the future in mind. Build foundational capabilities that can be expanded or built upon later.

- Establish a good set of methodologies for use-case discovery, vetting, and prioritization. Be selective about the first GenAI use case: start with quick wins that are non-controversial and build momentum. It needs to either make money or save money; otherwise, it probably isn’t worth doing.

- Establish a framework for build vs. buy. For example, Microsoft 365 offers Copilot, so experimenting there can provide immediate value with very little deployment risk.

- Invest in building domain innovations for your customers and employees and not in building technical patterns.

- Create a responsible AI strategy, with continuous evaluation of new tools and approaches to mitigate risks.

- Constantly evaluate and learn.

- Ensure that there is a “human in the loop” to vet GenAI output at first, while exploring ways to automate and scale. Find weaknesses and areas for improvement.
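The value-and-feasibility screen described above can be turned into a simple ranking. The Python sketch below is one possible approach, not a standard method: it assumes hypothetical 0 to 5 scores for value (revenue, productivity or quality, or cost savings) and feasibility (technology, data, skills, and time), drops any candidate with no value or no feasibility, and ranks the rest by the product of the two scores. The use cases and scores are made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    value: int        # hypothetical 0-5: revenue, productivity/quality, or cost impact
    feasibility: int  # hypothetical 0-5: technology, data, skills, and time to deliver


def prioritize(candidates: list[UseCase]) -> list[UseCase]:
    # Drop candidates that create no value or cannot be delivered today,
    # then rank the rest so valuable, achievable quick wins come first.
    viable = [c for c in candidates if c.value > 0 and c.feasibility > 0]
    return sorted(viable, key=lambda c: c.value * c.feasibility, reverse=True)


ranked = prioritize([
    UseCase("Customer-care answer assistant", value=5, feasibility=4),
    UseCase("Code modernization helper", value=4, feasibility=3),
    UseCase("Novelty chatbot with no business case", value=0, feasibility=5),
])
for c in ranked:
    print(f"{c.name}: {c.value * c.feasibility}")
# Customer-care answer assistant: 20
# Code modernization helper: 12
```

Multiplying the scores means a use case must do reasonably well on both dimensions to rank highly, which mirrors the advice to avoid options that are valuable but too hard, or easy but pointless.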

***

Generative AI presents enormous opportunity for business value. The challenge today is that it is so new and so hyped that most companies aren’t sure where to begin, much less where to place big bets. Converge can help. We offer two workshops in which we explore your AI opportunities and priorities, and our experts help you identify meaningful, actionable first steps. Our AI Pre-flight Plan is a short exploration lasting a few hours, in which we educate you on AI opportunities and explore potential first projects and quick wins. Our Design Thinking for AI Workshop is a more intensive, multi-week engagement in which we help you dive into more detailed functionality, build workable plans, and flesh out the benefits to an ROI level.

The next wave of generative AI is upon us, and before you blink, the next wave will be too. Converge can help you avoid drowning in data. Connect with us today.