The excitement around GenAI mostly centers on the uniqueness and power of the models themselves. But models don’t exist in a vacuum. To build a GenAI-powered business app, you need more than just the LLM: you need an entire AI-driven architecture to support it.

Exploring the Architecture Behind GenAI-Driven Applications

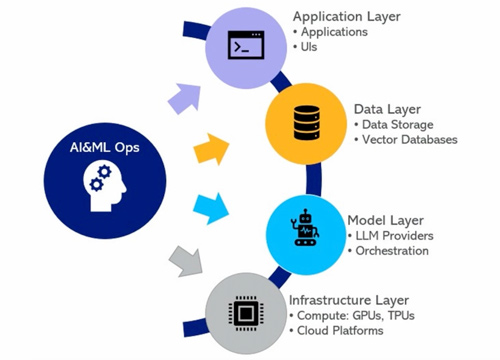

Consider this high-level illustration of a GenAI architecture as it stands in today’s environment. It is a snapshot of this year, or even this month, and one can imagine how much more complex it will become as AI continues to develop.

This view of GenAI architecture is relevant in several ways. First, it illustrates the dependencies and elements that successful AI business apps (i.e., those used by customers or employees) require. Second, it shows what an IT-centered AI app requires, such as AIOps and observability platforms. Third, and most important to this discussion, it shows the various areas that need to be visible to, and measurable by, AIOps and observability tools.

It starts with the infrastructure layer, which provides the compute power for AI apps through specialized hardware such as GPUs and TPUs, most likely running on cloud platforms. Next, the model layer provides the LLMs themselves, which may come from platforms such as watsonx, OpenAI, or Hugging Face, and may need an orchestration and service API framework, such as LlamaIndex or LangChain. You won’t be able to use AI unless you can access large quantities of data. That means managing your data stores and exposing that data to your model via vector databases, such as Weaviate or Pinecone. Finally, the applications layer uses everything below it in the architecture to provide services to your customers.
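To make the layering concrete, here is a minimal Python sketch that models the four layers described above as plain data, listed bottom to top in the order the text introduces them. The per-layer signal names (`gpu_utilization`, `latency_ms`, and so on) and the `dependencies` and `monitoring_checklist` helpers are hypothetical illustrations, not part of any AIOps or observability product’s API.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    name: str
    components: list[str]
    # Hypothetical signals an observability/AIOps tool might collect for this layer.
    signals: list[str] = field(default_factory=list)


# Layers ordered bottom to top, following the order described in the text.
GENAI_STACK = [
    Layer("infrastructure", ["GPUs", "TPUs", "cloud platforms"],
          ["gpu_utilization", "cloud_cost"]),
    Layer("model", ["LLMs", "orchestration framework (e.g. LangChain)"],
          ["latency_ms", "tokens_per_second"]),
    Layer("data", ["data stores", "vector database (e.g. Pinecone)"],
          ["query_latency_ms", "index_size"]),
    Layer("application", ["customer- and employee-facing apps"],
          ["error_rate", "availability"]),
]


def dependencies(layer_name: str) -> list[str]:
    """Return the names of every layer below the given one."""
    names = [layer.name for layer in GENAI_STACK]
    return names[: names.index(layer_name)]


def monitoring_checklist() -> dict[str, list[str]]:
    """Map each layer to the signals that should be visible to AIOps/observability."""
    return {layer.name: layer.signals for layer in GENAI_STACK}
```

Calling `dependencies("application")` returns `["infrastructure", "model", "data"]`, reflecting the point that the application layer relies on everything beneath it, and `monitoring_checklist()` gives one list of signals per layer to watch.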

AIOps and Observability: The Key to Managing AI Complexity

What the image above shows is that GenAI is more than just the LLM; it’s a complex architecture. To gain visibility, explainability, and new levels of monitoring and measurement, you’ll need a next-generation observability platform and a next-generation AIOps platform. AIOps gives you visibility into the high-performance computing infrastructure, including hyperscaler performance and how the LLMs are consuming cloud resources. You’ll need to understand response time and throughput to make sure the LLMs are delivering the responses you expect in terms of speed, functionality, and accuracy. AIOps also enables monitoring of the data storage and vector database layers, and oversees the applications to measure their reliability and efficiency.
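Response time and throughput can be summarized from per-request timing records. The sketch below assumes a hypothetical `LLMRequest` record (start time, end time, output token count) supplied by your observability tooling, and computes nearest-rank p50/p95 latency plus request and token throughput over the observed window. It is illustrative only; real platforms typically compute these metrics for you, sometimes with different percentile methods.

```python
import math
from dataclasses import dataclass


@dataclass
class LLMRequest:
    start_s: float       # request start time, in seconds
    end_s: float         # response completion time, in seconds
    output_tokens: int   # tokens generated in the response


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile, with p in (0, 100]."""
    ordered = sorted(values)
    rank = math.ceil(p / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


def summarize(requests: list[LLMRequest]) -> dict[str, float]:
    """Latency percentiles and throughput over the window the requests span."""
    if not requests:
        raise ValueError("no requests to summarize")
    latencies = [r.end_s - r.start_s for r in requests]
    window = max(r.end_s for r in requests) - min(r.start_s for r in requests)
    if window <= 0:
        raise ValueError("observation window must be positive")
    total_tokens = sum(r.output_tokens for r in requests)
    return {
        "p50_latency_s": percentile(latencies, 50),
        "p95_latency_s": percentile(latencies, 95),
        "requests_per_s": len(requests) / window,
        "tokens_per_s": total_tokens / window,
    }
```

For example, four requests spanning a 4-second window with latencies of 1.0, 2.0, 0.5, and 2.0 seconds and 500 output tokens in total yield a p50 of 1.0 s, a p95 of 2.0 s, 1 request per second, and 125 tokens per second. Comparing such numbers against your own latency targets is one way to check that responses meet expectations for speed.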

Using Data-Driven Insights for Optimization and Performance

SREs can take advantage of the data points that AIOps and observability tools provide to find opportunities for cost savings and optimization. Looking at the performance and outputs of your LLMs also helps your data scientists and data engineers see how the models are performing and identify improvements. Observability gives you the visibility to know when to tweak or retrain a model. If you integrate observability with AIOps, you’ll be able to track incidents and fix them without switching between multiple screens or tools. SREs can then identify the root cause, remediate issues faster, minimize downtime, and maintain or improve performance.
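As a rough illustration of turning these data points into decisions, the sketch below aggregates token spend per model and flags a model for review when its recent quality scores drift below a baseline. The model names, per-1K-token prices, window size, and tolerance are all hypothetical parameters, and how you score output quality (human ratings, automated evaluations) is an assumption left to your own tooling.

```python
from collections import defaultdict
from statistics import mean


def cost_by_model(usage: list[tuple[str, int]],
                  price_per_1k_tokens: dict[str, float]) -> dict[str, float]:
    """Aggregate spend per model from (model, tokens) records.

    Prices are hypothetical inputs, expressed per 1,000 tokens.
    """
    totals: dict[str, float] = defaultdict(float)
    for model, tokens in usage:
        totals[model] += tokens / 1000 * price_per_1k_tokens[model]
    return dict(totals)


def needs_retraining(scores: list[float], baseline: float,
                     window: int = 5, tolerance: float = 0.05) -> bool:
    """Flag a model when the mean of its last `window` quality scores
    falls more than `tolerance` below the baseline score."""
    if len(scores) < window:
        return False  # not enough recent data to judge
    return mean(scores[-window:]) < baseline - tolerance


usage = [("model-a", 2000), ("model-b", 1000), ("model-a", 1000)]
prices = {"model-a": 0.5, "model-b": 2.0}  # hypothetical prices
print(cost_by_model(usage, prices))
print(needs_retraining([0.9, 0.9, 0.8, 0.8, 0.8, 0.8, 0.8], baseline=0.9))
```

A fixed window and tolerance keep the rule easy to reason about; in practice you would tune both against your own evaluation data, and a flag like this is a prompt for human review rather than an automatic retraining trigger.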

The final part of our “Observe and Operate Your GenAI Architecture” series covers best practices for successful AIOps implementation. If you missed the first part, read Part One: The GenAI Revolution.

This article is adapted from a presentation by Manoj Khabe, Converge Vice President, Observability, AIOps and Automation, and Brian Zied, IBM Senior Automation Technical Specialist. View a recording of the presentation here.