I recently sat down with Converge’s Vice President of Engineered Solutions, Darren Livingston, and Team Lead for HPC Solution Architecture, George Shearer, to discuss how the rise of artificial intelligence (AI) is affecting our clients’ networking infrastructure needs.

With decades of experience in the IT industry, Darren and George manage the design and implementation of high-performance computing (HPC) networks. Today, they offer key networking insights and considerations based on their work with a wide range of clients and use cases.

How is GenAI changing our clients’ networking needs?

Darren: Generative AI has pushed networking hard. George, I think you’d agree with this: when ChatGPT came out, with its heavy use of GPU memory and the training of ever-larger models, the days when one or two GPUs in a server would suffice were gone. Now you’re talking about racks full of servers with four or eight GPUs each, and the whole world of models has just exploded in the last two years.

It’s all about the backbone, and the backbone of the generative AI movement is definitely networking: low latency, high bandwidth, constantly pushing for more. The challenge is that the more data goes into these models, the more networking they need. You can start small, but every time you want to do something new, you must observe and make sure the network can support those workloads.

George: Most of my customers understand how to manage conventional enterprise networks, so I find it useful to contrast them with HPC networking. Ethernet is the workhorse in almost all conventional data centers and is required to provide transport for multiple services: voice, video, data, etc. The primary function of these networks is to provide users (who may be local or remote) with access to company resources and data. There are, of course, many other functions, such as backups and replication. But in HPC data centers, the network is used as a “fabric” that allows nodes within a given cluster to behave as one: a supercomputer. Think of the network in an HPC data center as a “backplane” to which various compute and storage resources are attached.

When non-IT folks hear the term “supercomputer,” they typically visualize a massive, building-sized single computer, when in reality it’s many individual computers “glued together” by the network fabric. One can repeat this model with almost any type of computer, even laptops. The “magic” is the network that allows many to behave as one. Hence the need for the network to be as fast as possible. An important takeaway is that in the world of HPC, there is no such thing as “fast enough.”

To Darren’s point, it was only a few years ago that 100-gigabit Ethernet was considered “fast.” The addition of GPUs to the HPC ecosystem has drastically changed the network’s requirements, because GPUs can “crunch numbers” many times faster than CPUs for parallel workloads like these.

What makes NVIDIA so dominant in this space?

Darren: When you really think about it, NVIDIA helped create the space. There are others, don’t get me wrong. There are other players in this market, but NVIDIA is the one that grew it; they incubated it. They’ve been doing it for a long time, and everyone’s now playing catch-up with them.

There are hundreds of thousands of open-source models out there now, and NVIDIA has been giving away models of its own. NVIDIA encourages people to learn its technology, but more importantly, by giving away these models, it lets people be creative and try new things, which increases adoption.

George: That is a great point, Darren, and super important to understand: NVIDIA had vision well in advance of their competition. I love using the following analogy to explain:

Imagine a scene in a video game. The data that describes how the scene should look typically does not change. It is pre-loaded into the computer’s memory. However, every time the player takes a step, the same data must be processed again to generate the next frame. This creates the illusion of motion.

Now, imagine you have 10 years of stock price data for a given company. A data scientist working in the world of finance may want to run millions of “scenarios” against this data to identify the best parameters to use for future trades.

NVIDIA realized long before anyone else that the very same GPUs they dominate the video game industry with could be applied to this use case. Not only did they begin to design GPUs specifically for this kind of work, but they also built an enormous ecosystem of hardware, software, APIs, and reference architectures that is unlike any other.
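To make the finance example concrete, here is a minimal sketch of a parameter sweep, assuming a synthetic random-walk price series in place of real market data and a hypothetical trading rule (`run_scenario`, the window sizes, and the thresholds are all illustrative, not anything described in the interview). The point it shows is structural: every scenario reads the same unchanging data, and no scenario depends on another, so the sweep runs sequentially here but is exactly the kind of work a GPU can spread across thousands of cores.

```python
import itertools
import random


def make_prices(days: int, seed: int = 42) -> list[float]:
    """Synthetic daily closing prices (a random walk) standing in for real data."""
    rng = random.Random(seed)
    prices = [100.0]
    for _ in range(days - 1):
        # Daily move drawn from a normal distribution with a 1% standard
        # deviation; floored at 1.0 so prices stay positive.
        prices.append(max(1.0, prices[-1] * (1 + rng.gauss(0, 0.01))))
    return prices


def run_scenario(prices: list[float], window: int, threshold: float) -> float:
    """Hypothetical rule: hold the stock for one day whenever today's close is
    more than `threshold` below its trailing `window`-day average.
    Returns the growth factor of $1 invested under that rule."""
    value = 1.0
    for t in range(window, len(prices) - 1):
        trailing_avg = sum(prices[t - window:t]) / window
        if prices[t] < trailing_avg * (1 - threshold):
            value *= prices[t + 1] / prices[t]
    return value


# Roughly 10 years of trading days (about 252 per year).
prices = make_prices(10 * 252)

# Each (window, threshold) pair is one "scenario". All of them read the same
# price data and are independent of one another; this loop runs them one at
# a time, but on parallel hardware they could all run concurrently.
grid = list(itertools.product(range(5, 60, 5), [0.0, 0.01, 0.02, 0.05]))
results = {params: run_scenario(prices, *params) for params in grid}

best = max(results, key=results.get)
print(f"best (window, threshold): {best}, growth factor: {results[best]:.3f}")
```

Scaling the grid from 44 scenarios to millions changes nothing about the data; only the amount of independent arithmetic grows, which is why this workload maps so naturally onto GPUs.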

Additionally, they acquired Mellanox because they knew they needed super-fast networking to tie it all together.

As a result, NVIDIA has made its products and solutions easy to consume, with best-in-class network performance. Existing code written for conventional CPUs often needs only minimal changes to take advantage of GPUs.

Why might customers not want InfiniBand, and what would you recommend instead?

Darren: It depends. It depends. InfiniBand is a well-known networking technology. People are using it, and it’s the fundamental cornerstone of NVIDIA’s pod architecture. On the Ethernet side, George commented earlier about BlueField cards, the specialized network cards that get you beyond 100-gig networking speeds. That’s relatively new technology. Some people are willing to experiment with it, and others are not. And there are times when InfiniBand might be overkill. It always depends on what best suits the client’s requirements.

We recommend what’s best for the client and their solution. I’ve seen clients start very small, but you can still see the need for a plan B. Perhaps the solution doesn’t have to include InfiniBand networking initially, but the servers might ship with InfiniBand cards as a plan B for growth. There are so many ways to slice it and have those conversations. It just depends on what best meets the client’s needs.

George: I agree, and just to add to that, as a solution architect, there are non-technical things we need to consider. First, I like to get to know my client. What kind of team is going to own the environment going forward? What are their skills like? Are they comfortable supporting InfiniBand? How will they secure the environment? Many things must be considered.

Additionally, InfiniBand equipment tends to have longer lead times. Some customers are not willing to wait.

Where does Ethernet fit in this discussion?

Darren: Again, the Ethernet discussion comes down to whatever best fits the client. NVIDIA’s Spectrum-X Ethernet platform, which pairs Spectrum switches with BlueField cards and NVIDIA’s DOCA software framework, was announced only recently. There’s a lot of interest in it, but it’s still very new to the conversation.

George: The biggest reason customers choose InfiniBand today, and probably for at least the next few years, is simply performance. Just as GPUs are well suited to the way data is processed in HPC environments, InfiniBand is better suited to building a “fabric” that allows many computers to behave as one.

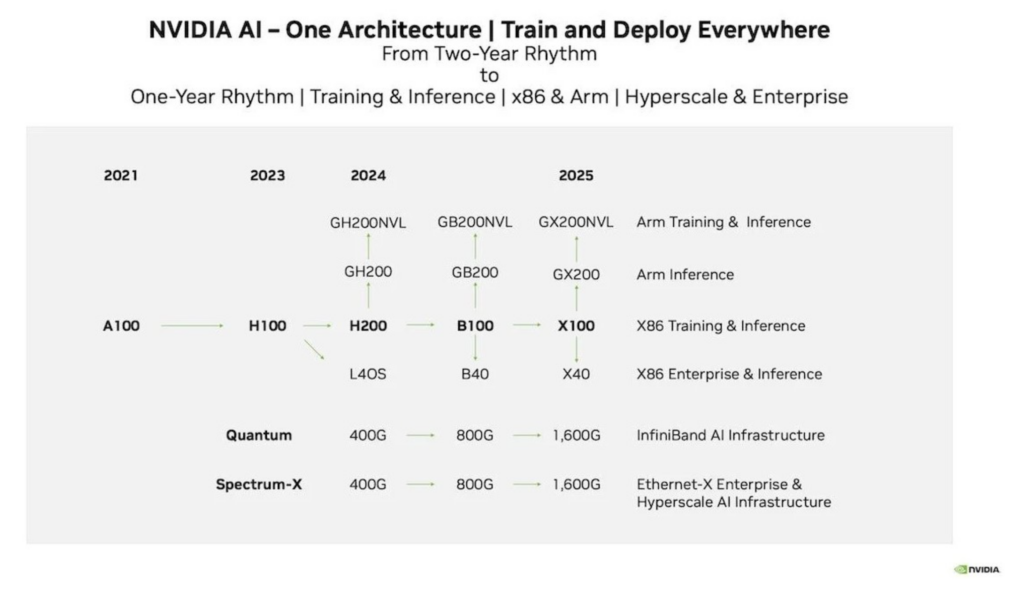

In terms of bandwidth, NVIDIA has consistently pushed InfiniBand speeds at least one generation ahead of Ethernet. For example, we are just now starting to see 800-Gbps Ethernet solutions when NVIDIA has been shipping 800-Gbps InfiniBand for a few years.

But it is more than bandwidth; it is also latency. InfiniBand network interfaces do more of the “work” than Ethernet interfaces (for example, handling transport and remote direct memory access in hardware), which lets CPUs and GPUs focus on the workload itself rather than on moving data on and off the wire.

The second reason is that the premier architecture for supercomputing right now is the NVIDIA DGX SuperPOD, based on the DGX pod architecture, and its compute fabric is all InfiniBand. So when clients build their clusters based on that standard, they know it has been well vetted and repeated over and over with very predictable outcomes. They do not stray from that architecture, whether they are using Dell, Lenovo, HPE, or NVIDIA’s own servers.

Darren: Or it just might be one or two nodes too, right?

George: Correct.

Darren: It just depends on the size.

George: But we cannot count Ethernet out just yet. A large group of top OEMs, called the Ultra Ethernet Consortium, is working to make Ethernet more competitive with InfiniBand. I expect to see significant improvements in the next few years that may change this conversation.

I do want to comment that I am in love with the Spectrum-X switches. Darren, I have to geek out a little bit about NVIDIA’s Ethernet solutions, too. NVIDIA is doing a few things that other OEMs are not.

First, they have a flow control mechanism that is native to their switch fabric. When designing InfiniBand networks, most customers want a “non-blocking fabric,” meaning that all nodes can send and receive at full speed at the same time. The flow control built into NVIDIA’s Spectrum-X switches allows Ethernet fabrics to achieve similar performance.
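One common way to check whether a switch tier is non-blocking is its oversubscription ratio: the bandwidth facing the nodes divided by the bandwidth going up to the next tier. The sketch below is a back-of-the-envelope helper, assuming hypothetical port counts and speeds (they are not taken from any specific NVIDIA or OEM product), and it only covers a single leaf switch, not the spine tiers above it.

```python
def oversubscription_ratio(down_ports: int, down_gbps: float,
                           up_ports: int, up_gbps: float) -> float:
    """Ratio of node-facing (downlink) bandwidth to uplink bandwidth on one leaf switch."""
    return (down_ports * down_gbps) / (up_ports * up_gbps)


def is_non_blocking(ratio: float) -> bool:
    # At 1:1 or better, the uplinks can carry everything the attached
    # nodes can send at full line rate.
    return ratio <= 1.0


# Hypothetical leaf A: 32 node ports at 400 Gb/s, 32 uplinks at 400 Gb/s.
leaf_a = oversubscription_ratio(32, 400, 32, 400)   # 12800 / 12800 = 1.0
# Hypothetical leaf B: 48 node ports at 100 Gb/s, 8 uplinks at 400 Gb/s.
leaf_b = oversubscription_ratio(48, 100, 8, 400)    # 4800 / 3200 = 1.5

print(f"leaf A {leaf_a:.1f}:1 non-blocking={is_non_blocking(leaf_a)}")
print(f"leaf B {leaf_b:.1f}:1 non-blocking={is_non_blocking(leaf_b)}")
```

A 1:1 ratio is a capacity statement only; whether traffic actually reaches full speed also depends on how the fabric spreads flows across those uplinks, which is where switch-level flow and congestion control come in.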

Second, NVIDIA gives the customer multiple choices for the kind of operating system they wish to run on Spectrum switches. Solutions from other OEMs typically only give you one choice, which is their native (and typically more expensive) operating system. One of the choices NVIDIA affords is called “Linux Switch,” which allows the customer to install any normal Linux distribution right on the switch!

Linux is king in the world of HPC, so being able to run it right on your switch fabric creates limitless possibilities for network acceleration, security, monitoring, and so on.

How important is next-gen data center sustainability, and why?

Darren: So, we’ve been talking about big clusters and InfiniBand, and all these GPUs generate heat. The next generation of CPUs is coming out, and they all produce more heat, too. The larger the cluster, the more power it needs, and power creates heat. Trying to air-cool these environments simply isn’t going to work going forward. It might not be the next generation, but it’s definitely coming down the line. GPUs, memory, CPUs: they’re all generating heat, and they all need to be cooled. The nice thing is that when you isolate and cool the CPU, GPU, or memory directly, you don’t have to spend as much cooling the whole data center. Organizations are also getting more creative and taking a serious look at these environments that run under tremendous loads. You can’t just keep throwing cooling at it, so people are figuring out new and creative approaches.

George: Heat is the primary enemy in the world of supercomputing. Modern CPUs and GPUs will run as fast as you can cool them, thus better cooling means better performance. To Darren’s point, we’ll see CPUs and GPUs in 2025 that run so hot they cannot be cooled properly with air alone.

So, it’s important to understand that HPC is not only changing the way we think about networks, but the way we design entire data centers. Not only do we have to properly cool the systems, but power must be upgraded as well. New PDUs, UPS systems, generators, even the racks, in some cases, must be changed.

The good news is that liquid cooling is so much more efficient that the electricity savings typically pay for the upgrade. This will be the single biggest factor driving liquid cooling adoption and, thus, sustainability.

I live a little over an hour south of Columbus, Ohio, one of the fastest-growing cities in the Midwest. Billions are being poured into the infrastructure there, in part because of the CHIPS Act and the new Intel facility being built nearby. As a result, enormous data centers are being built throughout the region.

One of the colocation facilities being built covers 130 acres, and it plans to offer up to 130 kilowatts of power per rack. Ohio’s Davis-Besse nuclear plant produces about 900 megawatts, and this one facility alone will consume a third of that. The electric industry is struggling to manufacture transformers fast enough to keep up with construction projects like this.
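To put those figures side by side, here is a quick worked calculation using only the numbers quoted above: a third of a 900-megawatt plant, divided into racks drawing the advertised 130-kilowatt maximum. It assumes, purely for illustration, that every watt goes to racks running at full draw and ignores cooling and other facility overhead, so a real site would host fewer racks for the same power.

```python
import math

PLANT_MW = 900   # plant output quoted in the interview
RACK_KW = 130    # maximum per-rack power the colo facility plans to offer

# "A third of that" plant's output.
facility_mw = PLANT_MW / 3

# Racks that power could feed if every rack ran at its 130 kW maximum
# (illustrative assumption: no cooling or other overhead).
racks_at_full_draw = math.floor(facility_mw * 1000 / RACK_KW)

print(f"{facility_mw:.0f} MW is roughly {racks_at_full_draw} racks at {RACK_KW} kW each")
```

In other words, a single facility of this kind could consume the equivalent of a large power plant’s output with only a few thousand racks.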

We do not have enough electricity in the entire world to simultaneously turn on every CPU and GPU we have already made. And yet, we are manufacturing CPUs and GPUs as fast as we can, and they are far more power hungry than previous generations.

Describe some successes you’ve had designing and implementing networking environments to support AI workloads. What lessons have you learned?

Darren: We’ve had dozens and dozens of wins in the networking space: we’ve sold, designed, implemented, upgraded, and grown these networks in many verticals, including military, government, academia, and healthcare. From small to large, from conventional networking all the way up to InfiniBand.

George: I agree with you, Darren. I see demand from customers in all industries, though healthcare is one of the biggest consumers to date. Everything from new drug discovery, genomics, and imaging to fighting cancer. The solutions vary slightly, but extremely fast networking is common to all of them. Understanding how to design, implement, validate, and grow these high-speed fabrics has been paramount to our success.

Darren: We’ve been talking lots about the compute piece of it. Storage is so huge and important to any AI conversation.

George: Hugely important.

Darren: Without data, there is no AI and no training of models. And data means storage, and the networking for storage. Our clients’ data is everywhere; it’s not just in one place. That networking, the transport of data, is so important, from processing it, to feeding it, to outputting it.

George: Another important takeaway is a phrase I have heard throughout my career: content is king. That has never been more true than it is with AI, because the more content you have (the more data you have), the better you can make your model.