Over the past several years, Ansible has become one of the top trending open-source projects, amassing over 20,000 contributors. With its growing popularity in the open-source world, Ansible has established itself as the most used configuration management tool by enterprises as of 2019.

Ansible’s growth over the years put it in a tough position. The open-source project, hosted on GitHub, is the central repository where all developers submit code for their plugins and modules. After Red Hat acquired the Ansible project, it quickly became aware of how fast changes were being developed, given the amount of content submitted to the project each day, and of the matching increase in the number of issues being discovered and reported.

Ansible Content Collections were introduced in Ansible release 2.8 to address these issues. The collections package management features allowed the Ansible team to separate the content that was part of the core product from the content that was created by the open-source community. This methodology allows contributors to package and distribute their content to other users by using a tar archive or downloading directly from the Ansible Galaxy repository.

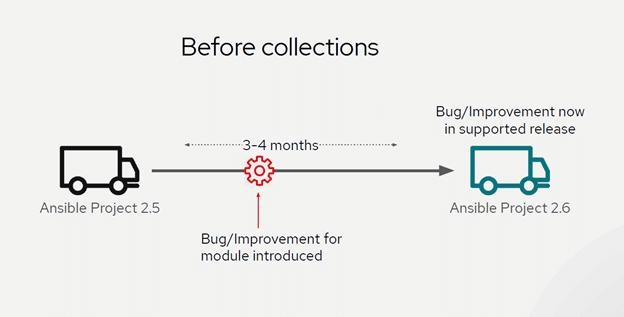

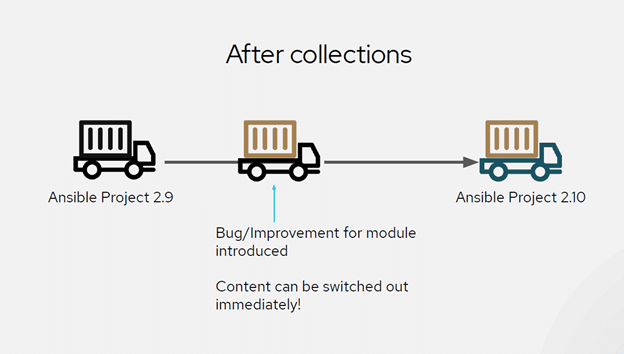

In Ansible Engine 2.8, this was simply a technical preview. In the 2.9 version, it became one of the standard ways to distribute content. In Ansible Engine 2.10, the open-source content will officially be separated from the core code base of Ansible. This will allow the Ansible dev team to speed up update rollouts for the tool; waiting 3-4 months for a bug fix or improvement, as was typical with previous versions of Ansible, will no longer be an issue.

Red Hat has done many presentations on this topic and concept, and I have found that the easiest way of illustrating this transition is with two slides from their presentations.

Now, if you are interested in using some collections in your current Ansible 2.9 environment, you can do this easily by following the steps in the collection’s README.md file. Or, if the collection does not document its own installation, you can follow the detailed blog written by Ajay Chenampara from Red Hat at this link. However, in this blog, I want to explore how you would go about packaging content that you create and making it available on Ansible Galaxy for the open-source community.

To showcase this, I created a basic module with a role and a playbook to accompany it. The module code I wrote for managing the realmd rpm package used for joining Identity Providers is far from perfect and should not be used without additional inspection in production environments. This blog is centered on some of the important requirements and considerations that must be addressed when creating your module and packaging content to Ansible Galaxy as a collection.

Developing an Ansible Module

When developing a module, there are some important questions to ask yourself: Will you keep to the standard and write the module with either Python or PowerShell, depending on what type of device you are trying to automate? Currently, if you want to contribute a module to Ansible and its repository, you need to write the module in Python along with any relevant code to support it. The only exception is if you want to write a module for Windows automation, in which case you would need to write the module in PowerShell.

You should also ask yourself this question: Will this be a module or a plugin? It is important to understand the differences here, as this will dictate how you approach writing the actual code. You should develop a module when you want to create a standalone script that Ansible can run on a remote target: it provides a defined interface, accepts arguments, and returns information to Ansible as JSON output. You should develop a plugin when you want to extend or augment Ansible’s core functionality. A plugin is able to transform data, log output, and connect to remote devices on behalf of your Ansible control host.

During one of my Ansible implementations, I noticed there was no easy way to join older RHEL servers to an IDP using the expect module due to Python library dependencies. Because of this, I decided to design my own Ansible module that uses the realmd RPM package and the pexpect Python library.

Addressing pre-requirements

Once you have decided to develop a module, the next step is picking an Ansible control host that you will use to test your code. On this host, you will want to clone the version of Ansible you will be developing for. Typically, this will be the latest version available on GitHub for the Ansible project. Once the repository has been cloned, you should consider setting up a Python virtual environment to work in so you can isolate your Python versions as you tinker with your code. This way, you can be sure that it works on both Python 2.7 and Python 3.5+. The actual steps for these requirements, as well as a template for creating the module, can be found at this link.

Writing the Code

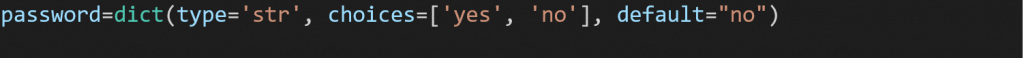

After copying the example code to a file where it will be modified, it is time to define the parameters of your soon-to-be Ansible module. When constructing the argument_spec variable, there are multiple rules you can assign for each of the parameters in your Ansible module to control the inputs from the user.

The variables above allow you to customize the functionality of each of the parameters:

- choices: This variable restricts the parameter to a specific set of accepted values, such as yes/no or present/absent.

- default: This variable allows you to specify the default value for a parameter. This is one of the features you might have noticed in Ansible module documentation. Some modules specify in their documentation that a parameter has a default value, such as the state argument.

- type: This variable will specify what data type the parameter should be.

More examples of these can be found in my module code located in the plugins/modules directory in my collection repository. You can search for argument_spec in the file to quickly find the other parameters I created.
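As a rough illustration of the three variables described above, here is a minimal sketch of an argument_spec. The parameter names (domain, state, password, install_packages) are hypothetical and are not taken from my collection; required and no_log are additional argument_spec options that are not covered in the list above.

```python
# Hypothetical parameters for a realm-join style module. The names are
# illustrative only; they are not the parameters of the author's module.
argument_spec = dict(
    # type: the value must be, or be convertible to, a string
    domain=dict(type="str", required=True),
    # choices: only these values are accepted
    # default: the value used when the user omits the parameter
    state=dict(type="str", choices=["present", "absent"], default="present"),
    # no_log keeps the value out of logs and module output
    password=dict(type="str", no_log=True),
    # type="bool" lets users write yes/no or true/false in a playbook
    install_packages=dict(type="bool", default=False),
)

# In a real module, this dictionary is handed to the module class:
#   module = AnsibleModule(argument_spec=argument_spec)
```

Keeping the specification as a plain dictionary makes it easy to review each parameter's rules in one place before wiring it into the module class.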

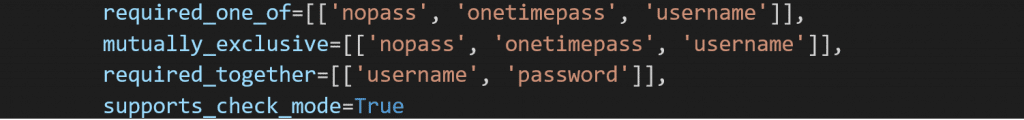

Once you have set up your argument_spec values for each of the parameters in your module, you can set more options to handle these arguments by specifying additional variables when creating your module class.

- required_one_of: Specifies a list of arguments where at least one of them needs to be selected.

- mutually_exclusive: Specifies groups of arguments that cannot be used together; if one argument in a group is specified, the others in that group must not be.

- required_together: Makes sure if one argument is specified, another argument in the list must also be specified.

- supports_check_mode: This is set to either True or False, and lets Ansible know whether the module can go through its functions without changing the state of the machine when the user runs it in check mode.

Once you have set up the structure of your module, you should also follow the steps provided at this link to set up a way to run your module through Ansible locally, if that is in scope for your module. Since my module was designed to run against Linux servers, this was not an issue for me, but if you are working on Windows automation, you would need to follow this link.
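The options in this list are keyword arguments of the AnsibleModule class. The sketch below shows one way they might be combined. The parameters (user, password, keytab) and the rules between them are hypothetical, chosen only to give each option something to act on.

```python
# Hypothetical parameters; not the ones used in the author's module.
ARGUMENT_SPEC = dict(
    domain=dict(type="str", required=True),
    user=dict(type="str"),
    password=dict(type="str", no_log=True),
    keytab=dict(type="path"),
)

MODULE_CONSTRAINTS = dict(
    # At least one way to authenticate must be given.
    required_one_of=[("password", "keytab")],
    # ...but not both at the same time.
    mutually_exclusive=[("password", "keytab")],
    # If either user or password is given, the other must be given too.
    required_together=[("user", "password")],
    # The module promises to honour check mode.
    supports_check_mode=True,
)


def build_module():
    """Create the module object from the spec and constraints above."""
    # Imported inside the function so this sketch can be loaded and
    # inspected on a machine that does not have Ansible installed.
    from ansible.module_utils.basic import AnsibleModule

    return AnsibleModule(argument_spec=ARGUMENT_SPEC, **MODULE_CONSTRAINTS)

# A real module file would call build_module() from its main() function.
```

With these rules, Ansible rejects a task that passes both password and keytab, or that passes user without password, before any of your own code runs.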

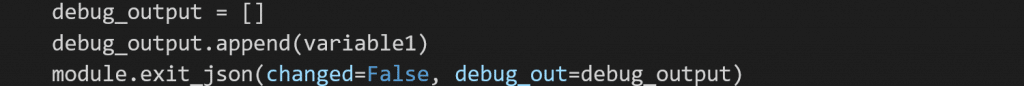

As with any code written for automation, you will need to debug it in order to help identify any issues. Keep in mind your module code is not the final code that will be sent to remote devices. Ansible takes the code you’ve written and constructs a new Python script that imports over 1000 lines on top of your code. To troubleshoot the issues I was seeing, I created breaks using the module class to output variables I set in Python as Ansible output when executing my module from Ansible Engine.
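A minimal sketch of that kind of debugging break follows. It assumes the break is implemented with exit_json, which is one way to do it and not necessarily identical to my module code. StubModule and debug_break are hypothetical names; the stub stands in for AnsibleModule so the sketch runs without Ansible.

```python
import json


class StubModule(object):
    """Stand-in for AnsibleModule so this sketch runs without Ansible.

    The real exit_json prints the result as JSON and then ends the
    process; this stub prints and records the result instead.
    """

    def __init__(self):
        self.last = None

    def exit_json(self, **kwargs):
        self.last = kwargs
        print(json.dumps(kwargs, sort_keys=True))


def debug_break(module, **variables):
    """Stop the module here and return the given variables as output."""
    module.exit_json(changed=False, debug=variables)


# Hypothetical values being inspected part-way through the module.
module = StubModule()
debug_break(module, realm="EXAMPLE.COM", step="before join")
```

In a real run, the variables show up in the task result on the control host, which is usually easier than trying to attach a debugger to the generated script on the remote device.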

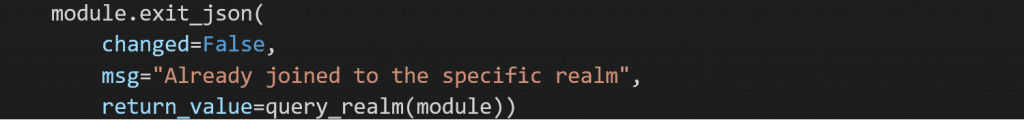

Finally, you need a way for your module to send return data from the execution back to Ansible. For this, use the exit_json method and set changed to indicate whether the module changed anything or left the system as it was. To report a failure, use the fail_json method with an error message.
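The exit scenarios can be sketched as follows. This is an illustration under assumptions, not my module's code: run, outcome, and the join callable are hypothetical, and StubModule replaces AnsibleModule by raising an exception where the real class would end the process.

```python
class ModuleExit(Exception):
    """Raised by the stub where the real AnsibleModule ends the process."""


class StubModule(object):
    """Stand-in for AnsibleModule so this sketch runs without Ansible."""

    def __init__(self, check_mode=False):
        self.check_mode = check_mode
        self.result = None

    def exit_json(self, **kwargs):
        self.result = dict(kwargs, failed=False)
        raise ModuleExit()

    def fail_json(self, **kwargs):
        self.result = dict(kwargs, failed=True)
        raise ModuleExit()


def run(module, already_joined, join):
    """Report one of: unchanged, would change, failed, or changed."""
    if already_joined:
        module.exit_json(changed=False, msg="already joined")
    if module.check_mode:
        # Check mode: report what would happen without doing it.
        module.exit_json(changed=True, msg="would join (check mode)")
    try:
        join()
    except RuntimeError as exc:
        module.fail_json(msg=str(exc))
    module.exit_json(changed=True, msg="joined")


def outcome(module, already_joined, join):
    """Run the sketch and hand back what the stub recorded."""
    try:
        run(module, already_joined, join)
    except ModuleExit:
        pass
    return module.result


def failing_join():
    raise RuntimeError("realm join failed")


unchanged = outcome(StubModule(), True, lambda: None)
changed = outcome(StubModule(), False, lambda: None)
dry_run = outcome(StubModule(check_mode=True), False, failing_join)
failed = outcome(StubModule(), False, failing_join)
```

Note that the check-mode branch returns before join is ever called, which is what lets a module claim supports_check_mode safely.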

Next Steps

Once you have completed the module and all its exit scenarios, it is time to document the module by providing all the same content you see for any module documentation on https://docs.ansible.com/ansible/latest/modules/ including: Synopsis, Parameters, Notes, Examples, and Return Values. To see an example of this, you can either reference my module code at the top of the Python file or see the extra documentation located here.
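In the module file, this documentation lives in three string variables holding YAML: DOCUMENTATION, EXAMPLES, and RETURN. The sketch below shows their general shape. The module name realm_example, the options, and the author line are placeholders, not the contents of my module.

```python
# Placeholder documentation for a hypothetical module named realm_example.
DOCUMENTATION = r"""
---
module: realm_example
short_description: Join a host to an identity provider realm
description:
  - Illustrative module documentation. The description entries are
    rendered as the Synopsis section of the module page.
options:
  domain:
    description: Realm or domain to join.
    type: str
    required: true
  state:
    description: Whether the host should be joined to the realm.
    type: str
    choices: [present, absent]
    default: present
notes:
  - Requires the realmd package on the managed host.
author:
  - Your Name (@your_github_handle)
"""

EXAMPLES = r"""
- name: Join the host to a realm
  my_namespace.my_collection.realm_example:
    domain: example.com
    state: present
"""

RETURN = r"""
msg:
  description: Human-readable result of the run.
  returned: always
  type: str
"""
```

The options block should mirror the argument_spec of the module, so a parameter's type, choices, and default read the same in the documentation as they behave in the code.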

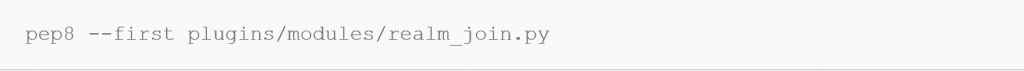

Once you have tested your module on the devices you want to automate, you are ready to do the final checks before submitting the module to Galaxy. The checklist is provided at this link, but some of the most important checks are making sure the module supports both Python 2.7 and Python 3.5 and uses syntax that is valid under Python 3. The code should also follow PEP8 Python style conventions. To accomplish this for my module, I used the pep8 Python library (since renamed pycodestyle) from the following location: https://pypi.org/project/pep8/.

The next step is to license your module. I generated the license for my collection by creating it in my GitHub repo, since GitHub makes it easy to generate licenses for repositories. To create a license, make a new file at the root of your repository called “LICENSE” or “LICENSE.md”. You will then see a dropdown where you can select public licenses such as GNU GPL, Apache, and MIT. To be able to contribute a module to Ansible, you should license your module under the GNU General Public License v3.0 or later. As seen in my collection, the LICENSE file contains all the information regarding the license and what it covers.

Collections file structure

To demonstrate how to create different content within a collection, I also created a playbook that invokes a role I wrote. The role lives in a separate directory from the playbook but is still within the collection structure. It installs RPM package dependencies based on which IDP (IPA/AD) the user is attempting to set up a connection to.

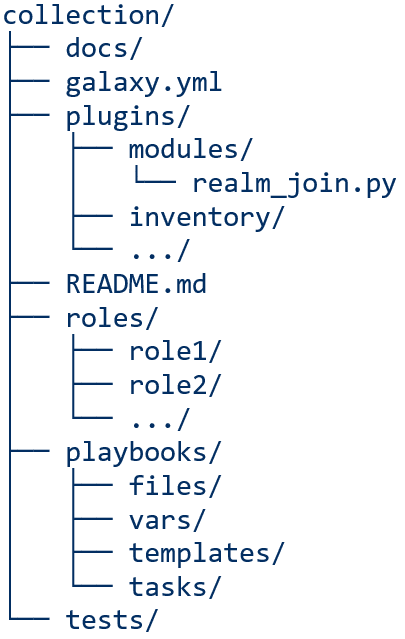

The folder structure should look similar to this diagram:

The important directories within my collection that had to be created were plugins, roles, and playbooks. Within the plugins directory, you will need to create another directory for modules. To begin, place your created module in that location. As this can be a lot of manual work, the ansible-galaxy command lets you run “ansible-galaxy collection init my_namespace.my_collection”, which will create the collection skeleton for you.
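To make the layout concrete, here is a small sketch that creates the directories named above in a temporary location and lists them. It is not what ansible-galaxy collection init generates; it only reproduces the layout described in this section, plus empty README.md and galaxy.yml placeholders. The function names are my own for illustration.

```python
import os
import tempfile

# Directories named in the text, plus two top-level files a collection carries.
COLLECTION_DIRS = ["plugins/modules", "roles", "playbooks"]
COLLECTION_FILES = ["README.md", "galaxy.yml"]


def create_skeleton(base, namespace, name):
    """Create base/namespace/name with the directories and files above."""
    root = os.path.join(base, namespace, name)
    for rel in COLLECTION_DIRS:
        os.makedirs(os.path.join(root, *rel.split("/")))
    for rel in COLLECTION_FILES:
        # Create an empty placeholder file.
        open(os.path.join(root, rel), "a").close()
    return root


def list_tree(root):
    """Return every entry under root as a sorted list of relative paths."""
    entries = []
    for current, dirs, files in os.walk(root):
        rel = os.path.relpath(current, root)
        prefix = "" if rel == "." else rel.replace(os.sep, "/") + "/"
        entries.extend(prefix + d + "/" for d in dirs)
        entries.extend(prefix + f for f in files)
    return sorted(entries)


base = tempfile.mkdtemp()
root = create_skeleton(base, "my_namespace", "my_collection")
tree = list_tree(root)
for entry in tree:
    print(entry)
```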

Before the new Galaxy collection on your file system is published to the upstream repository and made available to the public, some additional information needs to be provided. You will need to update the README.md file to provide documentation on how to install and use the collection on the imported system. Additionally, a galaxy.yml file is required for providing information that will be used in Ansible Galaxy such as your license, description, and the tags you want to associate with your collection. All of these attributes will help others find your content when searching Galaxy. You can find more information about the structure of galaxy.yml at this link.
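As a hedged sketch of what that metadata might contain, the snippet below renders a small galaxy.yml from Python. The values are placeholders. The keys shown (namespace, name, version, readme, authors, description, license, tags) are galaxy.yml keys to the best of my knowledge, but check the galaxy.yml documentation for the authoritative list. The render and tarball helpers are hypothetical.

```python
import json

# Placeholder metadata; replace every value with your own.
GALAXY_METADATA = [
    ("namespace", "my_namespace"),
    ("name", "my_collection"),
    ("version", "1.0.0"),
    ("readme", "README.md"),
    ("authors", ["Your Name <you@example.com>"]),
    ("description", "Illustrative collection with a module, a role, and a playbook"),
    ("license", ["GPL-3.0-or-later"]),
    ("tags", ["linux", "identity"]),
]


def render_galaxy_yml(metadata):
    """Render the metadata as YAML text, double-quoting every string."""
    lines = []
    for key, value in metadata:
        if isinstance(value, list):
            lines.append("%s:" % key)
            lines.extend("  - %s" % json.dumps(item) for item in value)
        else:
            lines.append("%s: %s" % (key, json.dumps(value)))
    return "\n".join(lines) + "\n"


def tarball_name(metadata):
    """Name of the archive, following the pattern used in this post."""
    values = dict(metadata)
    return "%s-%s-%s.tar.gz" % (values["namespace"], values["name"], values["version"])


galaxy_yml = render_galaxy_yml(GALAXY_METADATA)
archive = tarball_name(GALAXY_METADATA)
print(galaxy_yml)
```

The namespace, name, and version values are worth getting right early, since they also determine the name of the archive you later upload.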

If you have created a role, you will also need to add a meta directory to it. The meta directory should contain a main.yml file with information similar to what you put in the galaxy.yml file. You can review the values needed at this link, or you can reference the roles/realm_config/meta/main.yml file in my collection.

Finally, to upload your collection to Galaxy, go to the root directory of your collection. From there, run:

collection_dir#> ansible-galaxy collection build

This will generate a tar archive in the same directory you ran the command in. This tar archive can now be used either to install your collection on another Ansible host or to upload it to Ansible Galaxy. To upload to Ansible Galaxy, run:

ansible-galaxy collection publish path/to/my_namespace-my_collection-1.0.0.tar.gz

To try the collection locally:

ansible-galaxy collection install my_namespace-my_collection-1.0.0.tar.gz -p ./collections

**NOTE**

To publish to Ansible Galaxy, you will need to be logged in to Ansible Galaxy on that host; building the archive and installing it locally do not require a login. In order to log in, run:

collection_dir#> ansible-galaxy login

Expanding automation use cases

Ansible collections are making packaging a lot more scalable for long-term use. Although the collections package management update will be an adjustment for many teams, this solution should enable Ansible to expand automation use cases by leveraging this development method.