Building a data protection program doesn’t happen overnight. The frequent comparison to a journey is accurate: there is a lot of ground to cover before reaching maturity. So, what’s the best way to get started? Determining which direction to go. An interesting...

Blogs

Networking Is the Backbone of AI: A Conversation on Networking Considerations for Artificial Intelligence

I recently sat down with Converge’s Vice President of Engineered Solutions Darren Livingston and Team Lead for HPC Solution Architecture George Shearer to discuss how the rise of artificial intelligence (AI) is impacting our clients’ needs around networking...

NIST CSF 2.0 Gains Ground as Universal Cybersecurity Framework

As consultants on the Governance, Risk, and Compliance team at Converge, we often hear from clients whenever a major cybersecurity or data breach incident hits the news. Their common question is, “Can this happen to us, and how can we be proactive?” They want...

Unleashing the Power of the Cloud: Beyond a Migration

The pace of technology innovation is driving organizations, large and small, to continually seek ways to stay ahead of the competition and remain agile. One key transformation reshaping technology across the globe is the migration of workloads to the public cloud....

Bridging the Gap Between Infrastructure and Artificial Intelligence

I sat down with Converge’s Vice President of Engineered Solutions Darren Livingston and Senior Director of AI and AppDev Dr. Jonathan Gough to discuss how they’ve managed to bridge the gap between infrastructure and artificial intelligence (AI) when designing and...

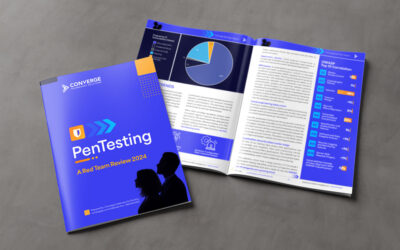

Inside the 2024 Red Team Penetration Testing Report

In the unending barrage of cyber attacks, keeping pace with current threats is paramount. A proactive approach that includes penetration testing raises the bar by finding weaknesses before attackers can exploit them. Our report condenses 12 months of...

Leadership Perspectives: A Q&A with Vic Verola, EVP of North American Sales

What led you to pursue a career in sales? I started my career as an engineer and was doing very well. I was generally consultative as an engineer and enjoyed client interaction. My father, who has always been in sales, influenced me heavily to pursue a career in sales...

The Sustainability Question

Alright, I’ll admit it – I enjoy my job. I find every day exciting and see each moment as an opportunity, although I know I’m lucky to admit such a secret, as not everyone has the chance to feel the same way I do. However, I will also admit that getting to this...